I recently asked Corey Harrell about any unfulfilled programming ideas he might have and he told me about his idea for a RegRipper Plugins maintenance Perl script.

He was after a script that would go into the plugins directory and then tell the user what plugins were missing from the various "Plugins" text files. Note: I am using "Plugins" to refer to the "ntuser", "software", "sam", "system", "security" and "all" text files. These contain a list of Perl (*.pl) plugins that a user can use to batch run against a particular hive.

He also mentioned that it might be handy to be able to exclude certain files (eg malware plugins) from being considered so if a user has deleted certain entries, they aren't constantly being reminded "Hey! There's a difference between what's in the "Plugins" file and what's actually available (ie the *.pl plugins)".

UPDATE: This script was not intended to blindly load every possible *.pl plugin into its relevant "Plugins" text file. It is intended as a diagnostic tool that answers the question "What (*.pl) plugins are missing from my current "Plugins" file (eg "ntuser")?". Users can then look at the missing plugins list and decide whether or not to include them in their "Plugins" text file.

UPDATE: Script ("PrintMissingPlugins" function) has been updated so commented-out entries (eg #ccleaner) in "Plugins" text files are not used in comparisons for missing *.pl plugins. Thanks Francesco!

UPDATE: Script ("PrintMissingPlugins" function) has been updated so blank lines in "Plugins" text files are not used in comparisons for missing *.pl plugins. Have also updated the Code and Testing sections.

So let's see how we can implement Corey's idea ...

The Code

# CODE STARTS ON LINE BELOW

#!/usr/bin/perl
# Note: I removed the "-w" above because the eval/require/getHive section was generating numerous variable masking warnings
# This shouldn't affect us as we only call the plugin's getHive method.
# Perl script to list updates for RegRipper plugin files (ntuser, software, sam, system, security, all)
# Original Idea by Corey Harrell
# Coded by cheeky4n6monkey@gmail.com
# Note: Probably best if you do NOT run this script from the RegRipper Plugins directory
# as it could affect the *.pl plugin processing.
# Created (2012-04-02)
# Modified (2012-04-03) for handling "#" commented out lines in "Plugins" text files (Thanks Francesco!)
# Modified (2012-04-04) for handling blank lines in "Plugins" text files (Thanks Francesco!)
# Modified (2012-04-04) re-instated "No missing plugins message"

use strict;
use Getopt::Long;
use File::Spec::Functions qw/catfile/;

my $version = "regripper-maint.pl v2012-04-04";
my $help = 0;
my $plugindir = "";
my $excludefile = "";
my @pluginslist;

GetOptions('help|h' => \$help,
    'dir=s' => \$plugindir,
    'x=s' => \$excludefile,
    'p=s@' => \@pluginslist);

# Print the Help message if requested or if a required argument (-dir, -p) is missing
if ($help || @pluginslist == 0 || $plugindir eq "")
{
    print("\nHelp for $version\n\n");
    print("Perl script to list discrepancies for RegRipper Plugin files (ntuser, software, sam, system, security, all)\n");
    print("\nUsage: regripper-maint.pl [-h|help] -dir plugins_dir [-x exclude_file] -p plugins_list [-p plugins_list ...]\n");
    print("-h|help ............ Help (print this information). Does not run anything else.\n");
    print("-dir plugins_dir ... Plugins directory.\n");
    print("-x exclude_file .... Exclude text file which lists plugins to exclude from any checks.\n");
    print("-p plugins_list .... Plugins file(s) to be checked (eg all, ntuser).\n");
    print("\nExamples: \n");
    print("regripper-maint.pl -dir /usr/local/src/regripper/plugins/ -p ntuser -p sam\n");
    print("regripper-maint.pl -dir /usr/local/src/regripper/plugins/ -x regripper-maint-exclude -p all\n\n");
    exit;
}

print "\nRunning $version\n\n";

# List of plugins derived from the default (ntuser, software, sam, system, security, all) plugins text files
my @ntuser_plugins;
my @software_plugins;
my @sam_plugins;
my @system_plugins;
my @security_plugins;
my @all_plugins;

# Directory file listing of *.pl files in user's plugins directory
my @readinplugins;

# @readinplugins list broken up by hive
my @ntuser_actual;
my @software_actual;
my @sam_actual;
my @system_actual;
my @security_actual;
my @all_actual;

my @excludelist;

# Extract entries from user nominated exclude file
if ($excludefile ne "")
{
    open(my $xfile, "<", $excludefile) or die "Can't Open $excludefile Exclude File: $!";
    @excludelist = <$xfile>; # extract each line to a list element
    chomp(@excludelist); # get rid of newlines
    close($xfile);
    foreach my $ig (@excludelist)
    {
        print "Ignoring the $ig plugin for any comparisons\n";
    }
    print "\n";
}

# Read in the entries in the default Plugins text file(s)
# Plugin files have lowercase names
foreach my $plugin (@pluginslist)
{
    open(my $pluginsfile, "<", catfile($plugindir,$plugin) ) or die "Can't Open $plugin Plugins File: $!";
    if ($plugin =~ /ntuser/)
    {
        print "Reading the ntuser Plugins File\n";
        @ntuser_plugins = <$pluginsfile>; # extract each line to a list element
        chomp(@ntuser_plugins); # get rid of newlines
    }
    if ($plugin =~ /software/)
    {
        print "Reading the software Plugins File\n";
        @software_plugins = <$pluginsfile>;
        chomp(@software_plugins);
    }
    if ($plugin =~ /sam/)
    {
        print "Reading the sam Plugins File\n";
        @sam_plugins = <$pluginsfile>;
        chomp(@sam_plugins);
    }
    if ($plugin =~ /system/)
    {
        print "Reading the system Plugins File\n";
        @system_plugins = <$pluginsfile>;
        chomp(@system_plugins);
    }
    if ($plugin =~ /security/)
    {
        print "Reading the security Plugins File\n";
        @security_plugins = <$pluginsfile>;
        chomp(@security_plugins);
    }
    if ($plugin =~ /all/)
    {
        print "Reading the all Plugins File\n";
        @all_plugins = <$pluginsfile>;
        chomp(@all_plugins);
    }
    close $pluginsfile;
}

# This code for determining a package's hive was cut / pasted / edited from "rip.pl" lines 42-67.
# Reads in the *.pl plugin files from the plugins directory and stores plugin names in hive related "actual" lists
# Note: the "all_actual" list will later be a concatenation of all of the "actual" lists populated below
opendir(DIR, $plugindir) || die "Could Not Open $plugindir: $!\n";
@readinplugins = readdir(DIR);
closedir(DIR);

foreach my $p (@readinplugins)
{
    my $hive;
    next unless ($p =~ m/\.pl$/); # gonna skip any files which don't end in .pl
    my $pkg = (split(/\./,$p,2))[0]; # extract the package name (by removing the .pl)
    $p = catfile($plugindir, $p); # catfile joins the plugins directory and filename into one path (from File::Spec::Functions)
    eval
    {
        require $p; # load the plugin file; the eval traps any error raised by "require" or getHive
        $hive = $pkg->getHive(); # hive name should be UPPERCASE but could be mixed *sigh*
    };
    print "Error: $@\n" if ($@);
    next unless (defined $hive); # no hive means the plugin did not load properly, so skip it

    if ($hive =~ /NTUSER/i )
    {
        push(@ntuser_actual, $pkg);
    }
    elsif ($hive =~ /SOFTWARE/i )
    {
        push(@software_actual, $pkg);
    }
    elsif ($hive =~ /SAM/i )
    {
        push(@sam_actual, $pkg);
    }
    elsif ($hive =~ /SYSTEM/i )
    {
        push(@system_actual, $pkg);
    }
    elsif ($hive =~ /SECURITY/i )
    {
        push(@security_actual, $pkg);
    }
    elsif ($hive =~ /ALL/i )
    {
        push(@all_actual, $pkg); # some .pl plugins have "All" listed as their hive
    }
}

# Calls PrintMissingPlugins to compare a Plugins text file list with an "actual" *.pl plugins list
foreach my $plugin (@pluginslist)
{
    if ($plugin =~ /ntuser/)
    {
        PrintMissingPlugins("NTUSER", "ntuser", \@ntuser_actual, \@ntuser_plugins);
    }
    if ($plugin =~ /software/)
    {
        PrintMissingPlugins("SOFTWARE", "software", \@software_actual, \@software_plugins);
    }
    if ($plugin =~ /sam/)
    {
        PrintMissingPlugins("SAM", "sam", \@sam_actual, \@sam_plugins);
    }
    if ($plugin =~ /system/)
    {
        PrintMissingPlugins("SYSTEM", "system", \@system_actual, \@system_plugins);
    }
    if ($plugin =~ /security/)
    {
        PrintMissingPlugins("SECURITY", "security", \@security_actual, \@security_plugins);
    }
    if ($plugin =~ /all/)
    {
        PrintMissingPlugins("ALL", "all", \@all_actual, \@all_plugins);
    }
}
# End Main

sub PrintMissingPlugins
{
    my $hive = shift; # hive name
    my $name = shift; # Plugins text file name
    my $actual_plugins = shift; # reference to list of plugins derived from *.pl files
    my $listed_plugins = shift; # reference to list of plugins derived from Plugins text file
    my @missing_plugins; # list stores *.pl files which are NOT declared in a given Plugins text file
    my @missing_pl; # list stores Plugin entries which do NOT have a corresponding .pl file

    print "\nThere are ".scalar(@$actual_plugins)." $hive plugins in $plugindir\n";
    # We say "lines" because there can be accidental multiple declarations *sigh*
    print "There are ".scalar(@$listed_plugins)." plugin lines listed in the $name Plugins file\n";
    print scalar(@excludelist)." plugins are being ignored\n";

    # Handle the "all" Plugin case discrepancy
    # There's a large mixture of different hive plugins listed in the "all" Plugins text file
    # and only a handful of plugins who actually return "All" as their hive.
    # At this point, @all_actual should only contain plugins which list "All" as their hive
    # In a fit of hacktacular-ness, we'll now also add the contents from the other "actual" arrays to @all_actual.
    # Otherwise, when we compare the list of "All" hive plugins (@$actual_plugins) with
    # the lines in the "all" Plugin (@$listed_plugins), there will be a lot of differences reported.
    if ($hive eq "ALL")
    {
        push(@$actual_plugins, @ntuser_actual);
        push(@$actual_plugins, @software_actual);
        push(@$actual_plugins, @sam_actual);
        push(@$actual_plugins, @system_actual);
        push(@$actual_plugins, @security_actual);
    }
    # From here on, @all_actual / @$actual_plugins will contain a list of every processed type of .pl plugin file

    # For each *.pl plugin file, check that it has a corresponding entry in the given Plugins text file
    # Note: names are compared as exact strings so any regex special characters in a name are harmless
    foreach my $pkg (@$actual_plugins)
    {
        my $res = scalar(grep { $_ eq $pkg } @$listed_plugins); # is this .pl listed in Plugins file ?
        my $ignore = scalar(grep { $_ eq $pkg } @excludelist); # is this .pl being ignored ?
        if ( ($res == 0) and ($ignore == 0) )
        {
            push(@missing_plugins, $pkg);
        }
    }

    if (@missing_plugins)
    {
        print "\nThere are ".scalar(@missing_plugins)." plugins missing from the $name Plugins file:\n";
        foreach my $miss (@missing_plugins)
        {
            print $miss."\n";
        }
    }

    # For each line in the Plugins text file, check that it has a corresponding *.pl file
    foreach my $plug (@$listed_plugins)
    {
        # If this Plugins entry has been commented out (by a preceding "#") OR if it is blank / starts with whitespace,
        # skip to next entry so we don't get told there's no corresponding .pl file
        if ( ($plug =~ /^#/) or ($plug !~ /^\S/) )
        {
            next;
        }
        my $res = scalar(grep { $_ eq $plug } @$actual_plugins); # does this Plugin entry have a corresponding .pl file ?
        my $ignore = scalar(grep { $_ eq $plug } @excludelist); # is this Plugin entry being ignored ?
        if ( ($res == 0) and ($ignore == 0) )
        {
            push(@missing_pl, $plug);
        }
    }

    if (@missing_pl)
    {
        print "\nThere are ".scalar(@missing_pl)." plugins declared in the $name Plugins file with no corresponding .pl file:\n";
        foreach my $miss (@missing_pl)
        {
            print $miss."\n";
        }
    }

    if ( (@missing_plugins == 0) and (@missing_pl == 0) )
    {
        print "No missing plugins detected\n";
    }
}

# CODE ENDS HERE

Code Summary

Here's a high level overview of the code:

- Parses command line arguments using "GetOptions"

- Prints out the Help message (if required)

- Extracts entries from the user nominated exclude text file (if one is specified)

- Reads in the entries in the specified "Plugins" text file(s)

- Reads in the *.pl plugin names from the plugins directory and stores them in hive related "actual" lists

- Calls the "PrintMissingPlugins" subroutine to compare a "Plugins" text file list with an "actual" *.pl plugins list. The subroutine also allows for commented out lines (eg "# COMMENT") and blank lines in "Plugins" files.
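As a rough illustration of the comparison step in the last bullet, here is a minimal sketch that does the same two-way check with a hash lookup rather than repeated grep calls. It is not the script's actual code: the "missing_from" helper and the sample lists are made up for this example.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper (not part of regripper-maint.pl): returns the items in
# @$have which appear in neither @$listed nor @$exclude, keeping input order.
sub missing_from
{
    my ($have, $listed, $exclude) = @_;
    my %skip = map { $_ => 1 } @$listed, @$exclude;
    return grep { !$skip{$_} } @$have;
}

# Made-up sample data standing in for the real lists
my @actual  = qw(ccleaner userassist typedurls);                   # from *.pl files
my @listed  = ('userassist', '#ccleaner', '', 'typedurls', 'wlm_cu'); # Plugins file lines
my @exclude = ();                                                  # exclude file lines

# Drop commented-out and blank lines before comparing
my @active = grep { /^\S/ && !/^#/ } @listed;

my @not_listed = missing_from(\@actual, \@active, \@exclude); # .pl files with no entry
my @no_pl_file = missing_from(\@active, \@actual, \@exclude); # entries with no .pl file

print "Missing from Plugins file: @not_listed\n";
print "No corresponding .pl file: @no_pl_file\n";
```

With this sample data, "ccleaner" has no active entry and "wlm_cu" has no .pl file. The hash version only pays off for large lists; for a couple of hundred plugins the grep approach in the script is perfectly adequate.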

If you have RegRipper installed, you should already have the necessary Perl packages installed (eg File::Spec::Functions).

It's not the most concise/efficient code. If in doubt, I tried to make it more readable (at least for me!). I also made more comments in the code so I wouldn't have to write a lot in this section. I think that might prove more convenient than scrolling up/down between the summary and the code.

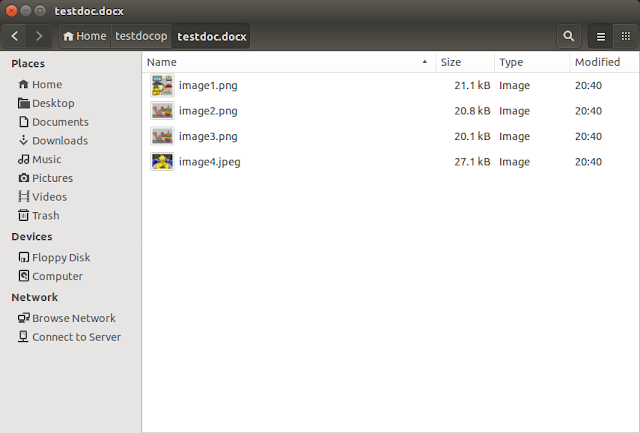

Finally, I should mention that I kept my "regripper-maint.pl" script (and my exclusion text file "regripper-maint-exclude") in "/home/sansforensics/". I didn't want the script to parse itself when looking for .pl plugin files. I suspect I could have just as easily called "regripper-maint.pl" from "/usr/local/src/regripper/". Meh.
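For anyone who would rather keep the script inside the plugins directory, one possible alternative (an assumption, not something the script above does) is to filter the script's own filename out of the directory listing before any plugin is loaded. The "plugin_candidates" helper and the sample listing below are hypothetical.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: keeps the *.pl names from a directory listing,
# except for the name of the maintenance script itself.
sub plugin_candidates
{
    my ($self, @files) = @_;
    return grep { /\.pl$/ && $_ ne $self } @files;
}

# Made-up listing, similar in shape to what readdir() returns.
# In a real run, $self could come from basename($0) (File::Basename).
my $self = 'regripper-maint.pl';
my @listing = ('.', '..', 'ntuser', 'ccleaner.pl', 'regripper-maint.pl', 'samparse.pl');

my @candidates = plugin_candidates($self, @listing);
print "@candidates\n";
```

This only avoids the script loading itself. It does nothing about any other stray *.pl files that might be sitting in the directory.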

Testing

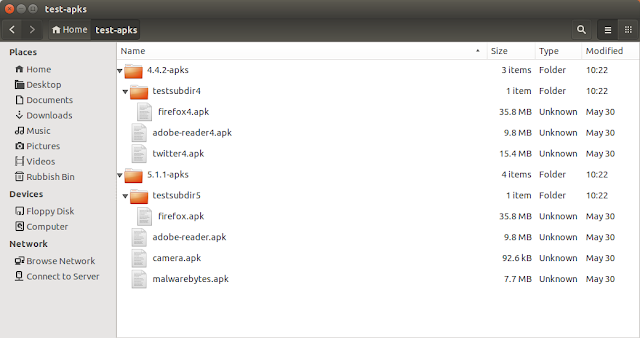

We'll start testing (on SIFT v2.12 using the default RegRipper install) with the 2 examples given in the script's Help message.

sansforensics@SIFT-Workstation:~$ ./regripper-maint.pl -dir /usr/local/src/regripper/plugins/ -p ntuser -p sam

Running regripper-maint.pl v2012-04-04

Reading the ntuser Plugins File

Reading the sam Plugins File

There are 98 NTUSER plugins in /usr/local/src/regripper/plugins/

There are 97 plugin lines listed in the ntuser Plugins file

0 plugins are being ignored

There are 1 plugins missing from the ntuser Plugins file:

ccleaner

There are 1 SAM plugins in /usr/local/src/regripper/plugins/

There are 1 plugin lines listed in the sam Plugins file

0 plugins are being ignored

No missing plugins detected

sansforensics@SIFT-Workstation:~$

Looks OK! I have not added my "ccleaner.pl" script to the "ntuser" Plugins file so the results make sense. We can check the number of lines in the "ntuser" Plugins file using the following:

sansforensics@SIFT-Workstation:~$ wc -l /usr/local/src/regripper/plugins/ntuser

97 /usr/local/src/regripper/plugins/ntuser

sansforensics@SIFT-Workstation:~$

Just as reported, there are 97 lines in the "ntuser" Plugins file. As for the SAM results:

sansforensics@SIFT-Workstation:~$ wc -l /usr/local/src/regripper/plugins/sam

1 /usr/local/src/regripper/plugins/sam

sansforensics@SIFT-Workstation:~$

Which seems OK as there's only one "sam" Plugins entry ("samparse").
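One caveat with "wc -l" is that it counts every line, including commented-out and blank ones, and so does the "plugin lines" figure the script prints. If you want the number of active entries instead, something like the following sketch would do it. The "count_entries" helper and the sample contents are made up; the skip rules mirror the ones used in "PrintMissingPlugins".

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: returns (total lines, active entries) for an open
# filehandle, using the same skip rules as PrintMissingPlugins.
sub count_entries
{
    my ($fh) = @_;
    my ($total, $active) = (0, 0);
    while (my $line = <$fh>)
    {
        $total++;
        chomp($line);
        next if ($line =~ /^#/);      # commented-out entry
        next unless ($line =~ /^\S/); # blank line (or one starting with whitespace)
        $active++;
    }
    return ($total, $active);
}

# Made-up contents; an in-memory filehandle keeps the example self-contained.
# For a real file, open the Plugins file path instead of \$sample.
my $sample = "userassist\n#ccleaner\n\ntypedurls\n";
open(my $fh, "<", \$sample) or die "Can't open in-memory sample: $!";
my ($total, $active) = count_entries($fh);
close($fh);

print "$total lines, $active active entries\n";
```

For the sample above this reports 4 lines but only 2 active entries, which is the kind of gap to expect once a Plugins file contains comments or blank lines.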

Now let's try the second help example ...

sansforensics@SIFT-Workstation:~$ ./regripper-maint.pl -dir /usr/local/src/regripper/plugins/ -x regripper-maint-exclude -p all

Running regripper-maint.pl v2012-04-04

Ignoring the ccleaner plugin for any comparisons

Reading the all Plugins File

There are 3 ALL plugins in /usr/local/src/regripper/plugins/

There are 204 plugin lines listed in the all Plugins file

1 plugins are being ignored

There are 2 plugins missing from the all Plugins file:

winlivemail

winlivemsn

There are 2 plugins declared in the all Plugins file with no corresponding .pl file:

port_dev

wlm_cu

sansforensics@SIFT-Workstation:~$

So this example shows the script referring to our "regripper-maint-exclude" exclusion file (which has one line containing "ccleaner"). Hence, it ignores any "ccleaner" plugin comparisons.

We can also see that while only 3 "ALL" *.pl plugins were found, there are 204 lines declared in the "all" Plugins file. The "all" Plugins file is a special case in that it can contain plugins which refer to more than one type of hive. See the code comments for more info on how I dealt with this (it ain't particularly pretty).
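To make the "all" handling a little more concrete, here is a minimal sketch of the same idea written so that the original "All" list is left untouched. This is a variation for illustration rather than what the script does (the script pushes the other lists directly onto @all_actual). The plugin names and their hive assignments are made-up sample data.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Made-up per-hive lists standing in for the script's "actual" arrays
my @all_actual      = qw(pluginA);
my @ntuser_actual   = qw(ccleaner pluginB);
my @software_actual = qw(pluginC);
my @sam_actual      = qw(samparse);

# Build a combined copy for the "all" comparison. The %seen guard keeps only
# the first occurrence of each name in case a list gets added more than once.
my %seen;
my @combined = grep { !$seen{$_}++ }
    (@all_actual, @ntuser_actual, @software_actual, @sam_actual);

print scalar(@all_actual)." plugins report All as their hive\n";
print scalar(@combined)." plugins get compared against the all Plugins file\n";
```

Working on a copy means the "All" count stays the same no matter how many times the comparison runs, for example if "-p all" were given twice on the command line.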

Anyhoo, we can also see that there are 2 .pl plugins which are NOT declared in the "all" Plugins file. Open the "all" file and verify it for yourself - there is no "winlivemail" or "winlivemsn" entry.

There are also 2 entries in the "all" Plugins file which don't have corresponding .pl files (ie "port_dev.pl" and "wlm_cu.pl" do not exist). That's gonna make it a bit hard to call those plugins eh?

And here is the same example test except WITHOUT using the "regripper-maint-exclude" exclusion file:

sansforensics@SIFT-Workstation:~$ ./regripper-maint.pl -dir /usr/local/src/regripper/plugins/ -p all

Running regripper-maint.pl v2012-04-04

Reading the all Plugins File

There are 3 ALL plugins in /usr/local/src/regripper/plugins/

There are 204 plugin lines listed in the all Plugins file

0 plugins are being ignored

There are 3 plugins missing from the all Plugins file:

winlivemail

winlivemsn

ccleaner

There are 2 plugins declared in the all Plugins file with no corresponding .pl file:

port_dev

wlm_cu

sansforensics@SIFT-Workstation:~$

You can see that the "ccleaner" plugin is now included in the missing plugins.

Just to prove this isn't all smoke and mirrors, here are the results for all 6 hives (with no exclusions):

sansforensics@SIFT-Workstation:~$ ./regripper-maint.pl -dir /usr/local/src/regripper/plugins/ -p ntuser -p software -p sam -p system -p security -p all

Running regripper-maint.pl v2012-04-04

Reading the ntuser Plugins File

Reading the software Plugins File

Reading the sam Plugins File

Reading the system Plugins File

Reading the security Plugins File

Reading the all Plugins File

There are 98 NTUSER plugins in /usr/local/src/regripper/plugins/

There are 97 plugin lines listed in the ntuser Plugins file

0 plugins are being ignored

There are 1 plugins missing from the ntuser Plugins file:

ccleaner

There are 54 SOFTWARE plugins in /usr/local/src/regripper/plugins/

There are 54 plugin lines listed in the software Plugins file

0 plugins are being ignored

No missing plugins detected

There are 1 SAM plugins in /usr/local/src/regripper/plugins/

There are 1 plugin lines listed in the sam Plugins file

0 plugins are being ignored

No missing plugins detected

There are 44 SYSTEM plugins in /usr/local/src/regripper/plugins/

There are 44 plugin lines listed in the system Plugins file

0 plugins are being ignored

No missing plugins detected

There are 3 SECURITY plugins in /usr/local/src/regripper/plugins/

There are 3 plugin lines listed in the security Plugins file

0 plugins are being ignored

No missing plugins detected

There are 3 ALL plugins in /usr/local/src/regripper/plugins/

There are 204 plugin lines listed in the all Plugins file

0 plugins are being ignored

There are 3 plugins missing from the all Plugins file:

winlivemail

winlivemsn

ccleaner

There are 2 plugins declared in the all Plugins file with no corresponding .pl file:

port_dev

wlm_cu

sansforensics@SIFT-Workstation:~$

I subsequently viewed the relevant Plugins files and confirmed that the number of plugin lines declared matched what was printed above. I could also have used the "wc -l" trick mentioned previously. Meh.

Summary

Using Corey Harrell's idea, we've coded a RegRipper maintenance script which can detect discrepancies between what's declared in the Plugins text files and what .pl plugins actually exist.

While this script doesn't help you if you don't use the Plugins text files, it has still been an interesting programming exercise. I can feel my Perl-fu growing stronger by the day ... muhahaha!

And for those that don't know, Cheeky4n6Monkey is now (intermittently) on Twitter (@Cheeky4n6Monkey) ... and coincidentally(?) getting a lot less work done! Thank you to all those who have already extended their welcome.

As always, please leave a comment if you found this post helpful / interesting / snake oil. PS I will send a copy of this script to the RegRipper folks just in case they find it useful (Thanks Brett and Francesco!).